The Deep Learning Debugging & Explainability Platform

Tensorleap helps AI teams understand, validate, and improve their models by analyzing how neural networks interpret data and why they fail.

From visibility to action

Tensorleap’s shared analytical layer links activations, data, and semantics, enabling concept-level inspection for any model.

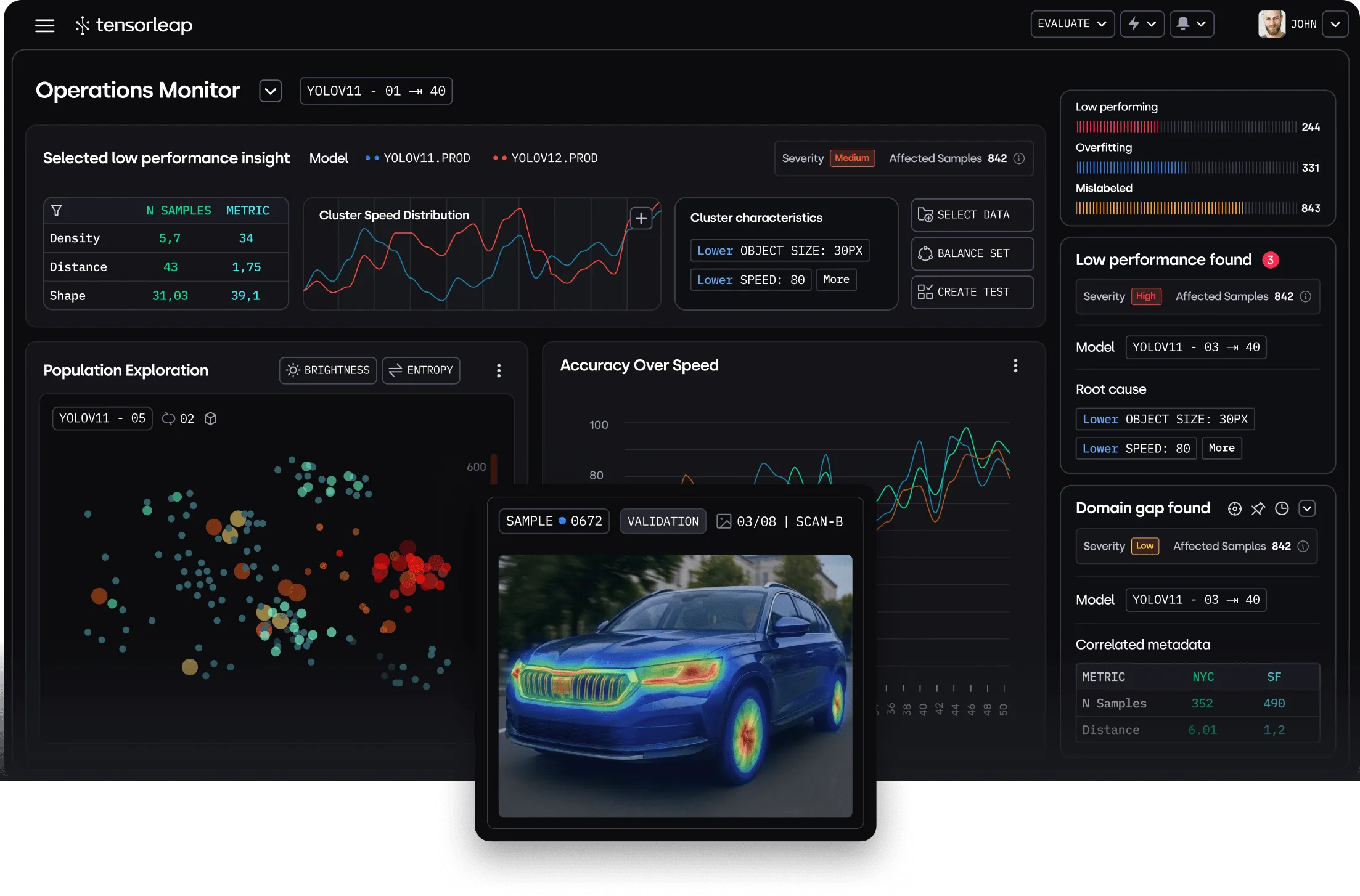

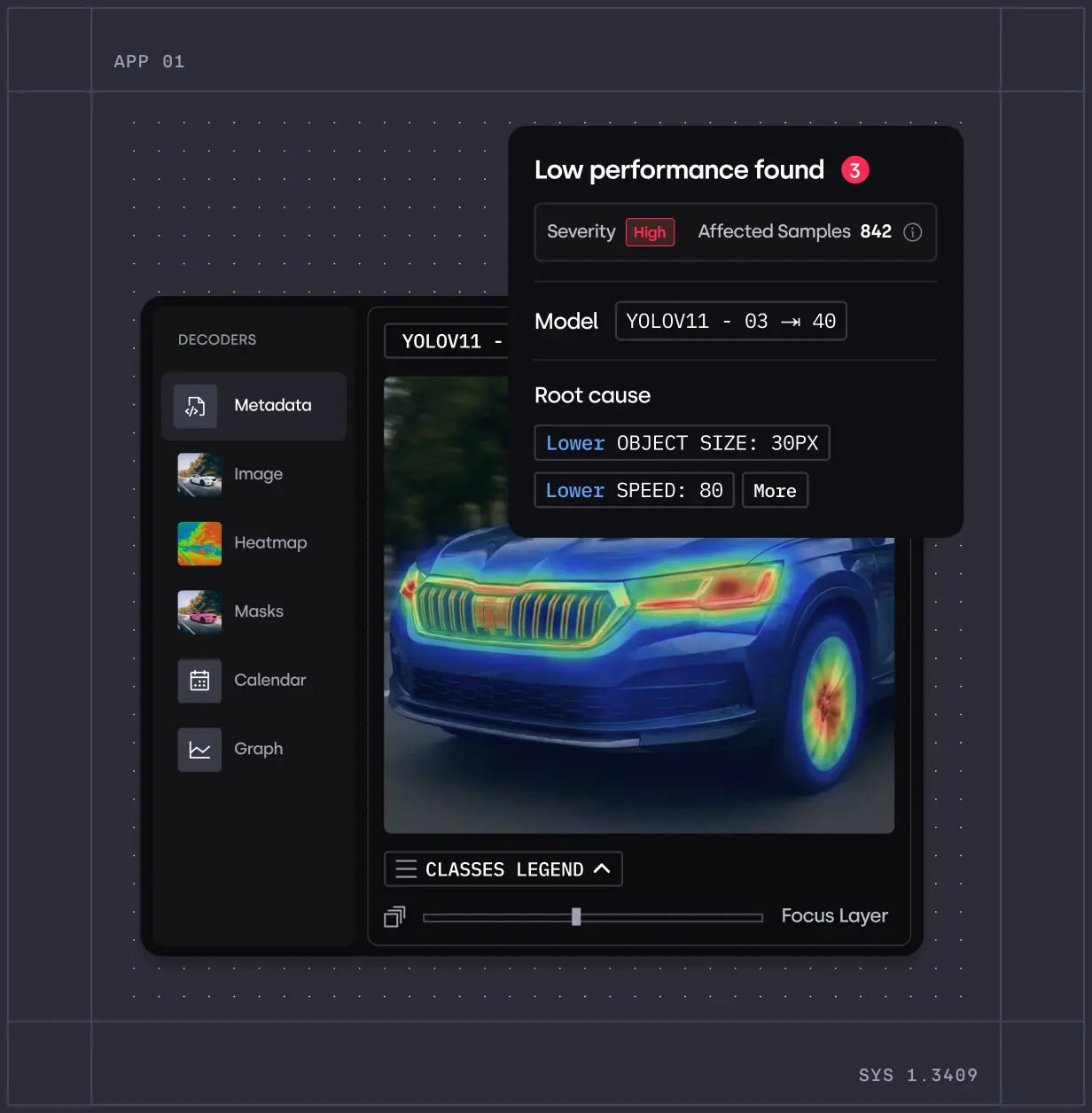

Tensorleap automatically surfaces and ranks semantic subgroups where your model underperforms, giving you the tools to fix them through targeted insights and guided improvements.

Automatic detection and ranking of aggressors by severity

Characterizing failure patterns to accelerate root-cause analysis

Representative samples & heatmaps for visual explanations

Retrieval of similar unlabeled samples for targeted labeling

Reusable tests to track regressions across model versions
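To make the idea of ranking underperforming subgroups concrete, here is a minimal sketch in plain Python. It is not Tensorleap's method or API: the `rank_subgroups` helper, the subgroup names, and the severity score (mean loss of the subgroup minus the overall mean loss) are all illustrative assumptions.

```python
from collections import defaultdict


def rank_subgroups(samples, min_size=2):
    """Rank subgroups by how far their mean loss exceeds the overall mean.

    `samples` is a list of (subgroup_name, loss) pairs. Both the grouping
    and the severity score are assumptions made for illustration.
    """
    overall = sum(loss for _, loss in samples) / len(samples)

    by_group = defaultdict(list)
    for group, loss in samples:
        by_group[group].append(loss)

    ranked = []
    for group, losses in by_group.items():
        if len(losses) < min_size:
            continue  # too few samples to trust the estimate
        gap = sum(losses) / len(losses) - overall
        ranked.append((group, gap, len(losses)))

    # Largest positive gap first: these subgroups perform worst.
    return sorted(ranked, key=lambda r: r[1], reverse=True)


# Hypothetical per-sample losses tagged with a semantic subgroup.
samples = [
    ("night", 0.9), ("night", 1.1),
    ("day", 0.2), ("day", 0.3), ("day", 0.1),
    ("rain", 0.6), ("rain", 0.8),
]
ranking = rank_subgroups(samples)
for group, gap, size in ranking:
    print(f"{group}: gap={gap:+.3f} over {size} samples")
```

In this toy data the `night` subgroup ranks first because its mean loss sits furthest above the overall mean. A real severity score would likely also account for subgroup size and statistical noise.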


Tensorleap identifies the concepts and features that cause models to behave differently across domains, including gaps between synthetic and real-world data. By comparing activations and metadata, it reveals how context, sensor, or environment shifts alter model focus, helping teams close the gaps that limit generalization.

Detect domain-specific concepts that impact model performance

Compare activations and metadata across datasets and environments

Guide targeted synthetic data generation to balance domain gaps
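As a rough illustration of comparing activations across domains, the sketch below computes a standardized mean difference per activation unit between a real and a synthetic dataset. The `activation_shift` function, the toy vectors, and the choice of metric are assumptions for illustration only, not a description of how the platform works internally.

```python
from statistics import mean, pstdev


def activation_shift(real, synthetic):
    """Return one standardized mean difference per activation unit.

    `real` and `synthetic` are lists of activation vectors (one per sample)
    of equal width. The metric is an illustrative assumption.
    """
    shifts = []
    for unit in range(len(real[0])):
        a = [vec[unit] for vec in real]
        b = [vec[unit] for vec in synthetic]
        # Spread of the combined samples; fall back to 1.0 if it is zero.
        spread = pstdev(a + b) or 1.0
        shifts.append((mean(a) - mean(b)) / spread)
    return shifts


# Hypothetical two-unit activations from each domain.
real = [[0.1, 2.0], [0.2, 2.2], [0.0, 1.8]]
synthetic = [[0.1, 0.5], [0.2, 0.4], [0.0, 0.6]]

shifts = activation_shift(real, synthetic)
most_shifted = max(range(len(shifts)), key=lambda i: abs(shifts[i]))
print(f"unit {most_shifted} differs most between domains")
```

Units with a large absolute shift are candidates for the concepts that separate the two domains, which is where targeted synthetic data would be most useful.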

Tensorleap analyzes unlabeled datasets by interpreting the model’s internal behavior to estimate confidence and identify likely errors.

When labeling is costly or datasets are too large to annotate, it exposes low-quality or uncertain samples first.

Estimate confidence and error likelihood across unlabeled samples

Identify uncertain or anomalous data for focused review

Prioritize labeling where it drives the most impact
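One common stand-in for estimating uncertainty on unlabeled data is the entropy of the model's predicted class probabilities. The sketch below uses that stand-in to build a labeling queue. It is a simplification: the text above describes using the model's internal behavior, whereas this example only looks at output probabilities, and the sample IDs and `prioritize` helper are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def prioritize(predictions, top_k):
    """Return the `top_k` sample IDs with the most uncertain predictions."""
    scored = sorted(
        predictions.items(),
        key=lambda item: entropy(item[1]),
        reverse=True,
    )
    return [sample_id for sample_id, _ in scored[:top_k]]


# Hypothetical softmax outputs for three unlabeled samples.
predictions = {
    "img_001": [0.98, 0.01, 0.01],  # confident
    "img_002": [0.34, 0.33, 0.33],  # close to uniform, very uncertain
    "img_003": [0.70, 0.20, 0.10],  # moderately uncertain
}
queue = prioritize(predictions, top_k=2)
print(queue)
```

Samples at the front of the queue are the ones where a human label is most likely to change what the model learns.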


Tensorleap helps data teams acquire, label, and structure the right data, using model-driven insights to eliminate guesswork, reduce waste, and strengthen training effectiveness.

Identify missing concepts your model struggles with to guide data acquisition

Reveal underrepresented cases to focus labeling efforts

Detect mislabeling that degrades training quality

Generate balanced dataset splits and weighted training sets to reduce leakage and improve generalization
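A weighted training set can be as simple as giving each sample a weight inversely proportional to how often its class appears. The sketch below shows that generic technique; the `inverse_frequency_weights` helper and the labels are assumptions for illustration, and the platform may derive weights quite differently.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each sample by total / (n_classes * class_count).

    With this scaling the weights sum to the number of samples, and every
    class contributes the same total weight.
    """
    counts = Counter(labels)
    n_classes = len(counts)
    total = len(labels)
    return [total / (n_classes * counts[label]) for label in labels]


# Hypothetical imbalanced labels: three cars, one truck.
labels = ["car", "car", "car", "truck"]
weights = inverse_frequency_weights(labels)
print(list(zip(labels, weights)))
```

Here the single `truck` sample receives three times the weight of each `car` sample, so both classes carry equal influence during training.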

From model to insight, in three steps

Upload your trained model and lightweight integration code defining how data is preprocessed and passed through the model for analysis.

The platform analyzes internal activations and latent-space relationships to uncover semantic patterns, failure clusters, and domain shifts.

Debug, optimize, and validate your model visually without changing your training workflow.